The AI Assistants Can All Go to Hell

We seem to be past the point of no return with these pesky little bots and dear god, I hate it here.

There are few things in life that repel me the way artificial intelligence does. Not that it’s novel for me to detest it—far from it. AI is actively helping to destroy our world in many ways: mass misinformation, environmental ruin, stripping us of our humanity, labor theft (or not?), etc. We all know this. But there’s a particular shape to the ire that is distinct in my personal bouquet of enemies. There’s a resignation, a queasiness, and an insistence on refusing to look directly at it, while seeing it very clearly all the time.

As with many forms of anger, I’ve found ways to compartmentalize and channel my feelings to help manage them. It helps to have a target when you’re looking to fire, after all. And as such, I’ve decided that the damage I find most personally catastrophic about AI is the way that it has singlehandedly ruined my previously beloved sparkle emoji.

I’m not the first to note this, but my god, what a loss. This ✨lovely✨ bit of graphic art used to add a sweet, gentle, ephemeral, cosmic sheen to a conversation. Now when I see those pointed symbols of varying configurations and sizes, I simply want to run away. The red baseball cap of the computer graphics world, you might say. It sucks, but if there’s a silver lining to be found in this identifier, it’s that it makes it easy to flag things I want to stay away from, which is something I’ve gotten decently good at.

In the last few years, as AI transformed from the stuff of bad Steven Spielberg movies to a looming-yet-vague threat to an everyday part of our lives, I’ve developed a strong aversion and some coping mechanisms to go along with it. I don’t engage with ChatGPT or OpenAI, and I’m avoidant probably to the point of being rude when my loved ones try to joke with me about funny prompts they’ve fed into these systems. I don’t blame them individually or believe in the personal responsibility myth when it comes to climate change, which is why I largely avoid replying with a reminder that their goofs are killing the planet. Life is a constant state of cognitive dissonance right now. We’re all making choices. Still, I stay away as much as I’m able. I don’t care if this is our future. It doesn’t mean I have to embrace it.

Virtually running away used to be relatively easy. In fact, my bubble was largely intact until the last few months or so, when AI assistants started fully taking over our lives like a quiet, pixelated cockroach infestation. We were very used to the likes of Siri and Alexa, but now these featureless and eager-to-please servants have infiltrated even the sacred (and yes, inherently compromised) halls of Zoom, Gmail, Instagram, and so much more. I am one of the relatively lucky people who earn a livelihood through a laptop, which means that everywhere I look now, and in every virtual space I occupy, I see those sparkles telling me that I’m not alone. It’s a reminder that these locales were never ours to begin with (there is literally no such thing as pure autonomy online) but also that they are increasingly subject to more and more advanced ways of taking things from us and offering very little in return.

Part of what is so evil about these assistants is how they just appear out of nowhere. Google’s recent Gemini rollout was one of the more startling examples of the presumed (aka forced) consent of users and an unintuitive disabling process that most users will never act on. It’s such a hellscape, all hiding behind those friendly, sparkly icons.

As I’ve stewed over this development during the last few weeks, I thought a lot about Clippy, the widely mocked Microsoft Office virtual assistant with googly eyes and a paperclip body. Microsoft rolled Clippy out in the ’90s and I feel safe in saying that he (she? they? it?) got more mileage as a watercooler topic than an actual assistant. Clippy was there as a visual representation of the help search bar, but people mostly saw it as an intrusion, and a dorky one at that.

Let’s have a little worlds colliding moment here and look at a portion of the 2025 AI overview in Google about Clippy:

Like sure yeah, that sums it up in a very basic way. But of course it doesn’t get at why Clippy had cultural staying power. That’s not what AI is for. It can’t provide nuance or a feeling, and it definitely can’t explain why something is stupid or deranged or endearingly stupid or deranged. It doesn’t feel a sense of why a meme becomes a meme, even if it can identify one as such.

I can imagine the argument that the AI assistants of today are simply the next generation of Clippy, but I didn’t want it then and I don’t want it now. Beyond that, Clippy was far less nefarious than what we’re dealing with today. The lines between virtual help, machine learning, and AI more broadly are blurry in the minds of consumers by design. The more we look at these tools as helpful and not as data mining and surveillance operations with deeply scary implications, the less likely we are to fight them as we’re being conscripted into aiding the building of our own dystopia. Convenience is a very friendly foe—we’re inclined to opt for it over imagination and human connection, even as the greater costs become well known.

I might be kicking and screaming about it, but I’ve largely accepted that the takeover of AI is happening whether we like it or not, and it will continue to disrupt the world we once knew in drastic and upsetting ways. Like Clippy and Gemini, it feels like it just showed up one day and will never leave. I don’t want their help and I don’t want to be part of their scheme, and I will fight my enlistment in this project as much as I am possibly able. Mrs. Dalloway said she would write the email herself, goddamnit. Couldn’t have made that stupid joke, Gemini!!!!

It’s bad enough that we live in a time where the biggest losers in the history of the planet are taking over the government and the media, hoarding wealth, exploiting the planet and their employees, and aiming to ensure that there’s no chance they’ll ever have to answer for their crimes—material, moral, or otherwise. I don’t know what they’re seeking or why, but they have a bottomless pit of need and no conscience, and somehow that has led to little robots asking us how they can help, even when we don’t need any.

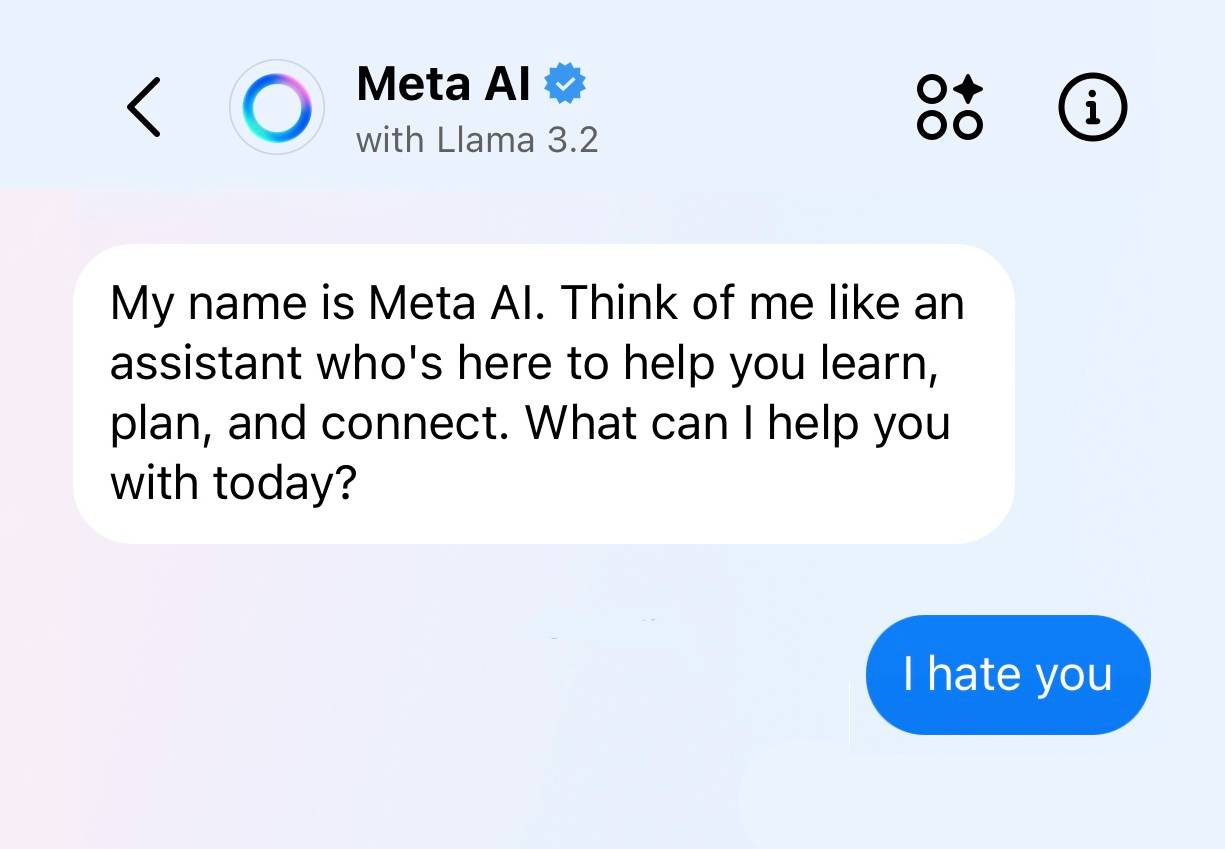

I want off this ride. I am already building up my defenses for a future in which AI is capable of making me feel bad for it, though frankly I’m not too worried about that with how things have been going lately. For now I’m just worried about billion-dollar corporations quietly inserting malevolent software into my life when I just want to read an email from my mom. I wonder what the Meta AI assistant might say if I asked it to help me destroy it, but I’ll hold off on that prompt for now. Maybe that’s a conversation we can take offline.

Probably a good place to mention that now when your Mac or iPhone does a software update it activates "Apple Intelligence" without telling you, even if you've turned it off before. Check your settings.

Thank you, Caitlin, for expressing quite eloquently what so many of us are feeling these days. As a small but I hope telling gesture against the Zeitgeist, I have made "Old Google" -- the original search service without ads or AI -- my default search engine. The procedure is quite simple, and can be found easily online. Up with the resistance!